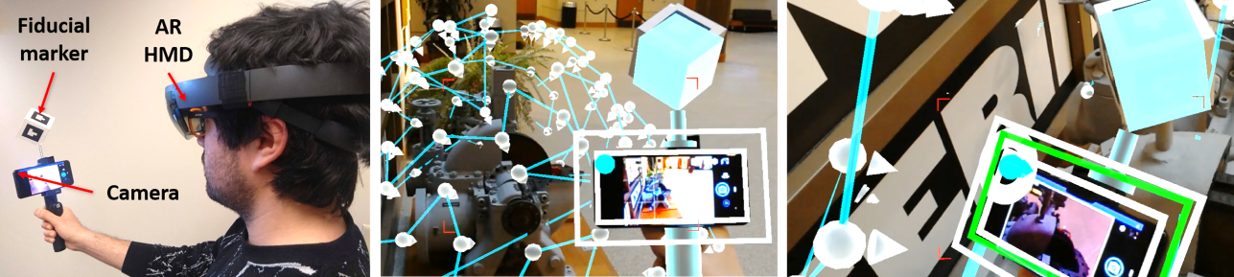

AR HMD Guidance for Controlled Hand-Held 3D Acquisition

Published:

Presented at ISMAR 2019

Published:

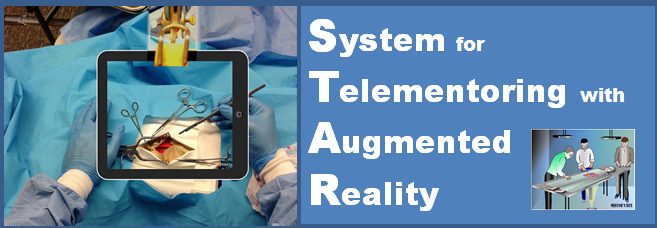

A multi-year interdisciplinary research project to improve surgical performance in austere environments using augmented reality

Published:

Final project for Purdue CS535

Published:

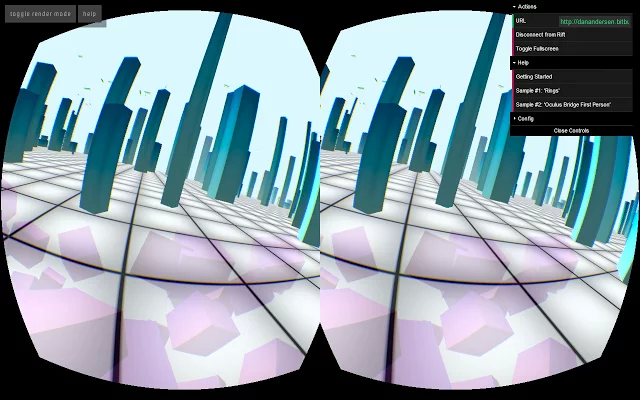

Cupola VR Viewer for Oculus Rift Head-Tracking in WebGL Virtual Environments

Published:

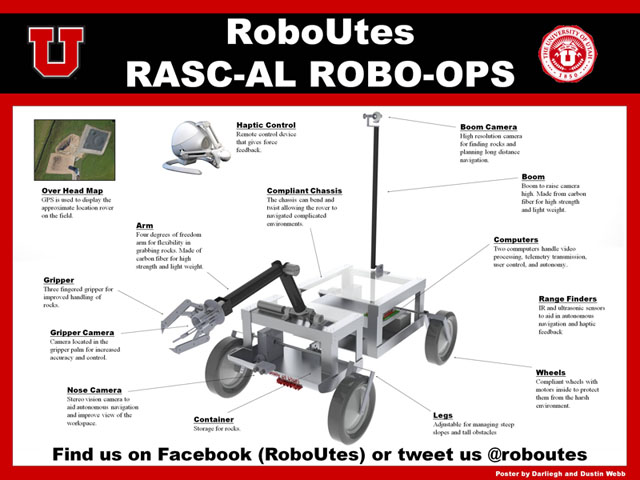

Mars rover competition